In this new post, after viewing some cool image distortions, we are going to look at several useful image transformations: horizontal and vertical flips, rotation (of any pixel channel), shifting (with or without wrapping the image around itself), resizing (using bilinear interpolation), splitting, shearing (which looks like a perspective view) and mipmapping. Finally, I will present nearly all of the existing mirror (reflection) effects.

Horizontal and vertical flips :

The horizontal and vertical flips are based on the famous swap function, which exchanges the contents of 2 elements. To apply a horizontal flip, for example, you browse the full height of your image matrix representation but only half of its width. For each row, each pixel in the first half of the width is swapped with its opposite pixel in the 2nd half: the opposite pixel of the 1st one on a row is the last one on that same row, ..., and the opposite pixel of the one at index n (counting from 0) is the one at index (last - n), where n < (width/2). The vertical flip works on the same principle, but you apply the procedure to each column, browsing the full width of your image matrix representation and only half of its height (see illustrations above).

Here is a function that can do the 2 flips according to the specified integer n :
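
What follows is only a minimal Python sketch of such a function, not the original listing. It assumes the image is a list of rows (each row a list of pixels) and that n = 0 means a horizontal flip while any other value means a vertical flip; that meaning of n and the name `flip` are assumptions made for this example.

```python
def flip(image, n):
    """Flip an image in place.

    Assumption for this sketch: n == 0 -> horizontal flip,
    any other value -> vertical flip.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if n == 0:
        # Horizontal flip: full height, only half of the width.
        for y in range(height):
            for x in range(width // 2):
                opposite = width - 1 - x
                image[y][x], image[y][opposite] = image[y][opposite], image[y][x]
    else:
        # Vertical flip: full width, only half of the height.
        for x in range(width):
            for y in range(height // 2):
                opposite = height - 1 - y
                image[y][x], image[opposite][x] = image[opposite][x], image[y][x]
    return image
```

When the width (or height) is odd, the middle column (or row) is its own opposite and is left untouched.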

Rotation :

Now, if you want to rotate an image by an angle n (n must be in radians; if n is in degrees, its radian value is (n*4*atan(1))/180, i.e. (n*π)/180), you have to browse your image matrix representation and replace each pixel at coordinates (x,y) with the pixel located at (x',y') (only if x' and y' are inside the image matrix representation), where x' = cos(n)*(x - (width/2)) + sin(n)*(y - (height/2)) + (width/2) and y' = -sin(n)*(x - (width/2)) + cos(n)*(y - (height/2)) + (height/2).

Note that the rotation can be applied about an origin other than the middle of your image: you just have to change the parameters (width/2) and (height/2) in the given formulas. You can also rotate only certain pixel channels of your choice (see the 3 last illustrations).
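
The rotation formulas can be sketched in Python as follows. This is only an illustration under a few assumptions: the image is a list of rows, the result is written to a new image, computed coordinates are rounded down to the nearest integer, and pixels whose source falls outside the image get a `fill` value. The names `rotate`, `cx`, `cy` and `fill` are hypothetical.

```python
import math


def rotate(image, angle, cx=None, cy=None, fill=0):
    """Rotate an image by `angle` radians around (cx, cy).

    By default the origin is the middle of the image, as in the formulas.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if cx is None:
        cx = width / 2
    if cy is None:
        cy = height / 2
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    result = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # (x', y') from the formulas, rounded down (assumption).
            sx = math.floor(cos_a * (x - cx) + sin_a * (y - cy) + cx)
            sy = math.floor(-sin_a * (x - cx) + cos_a * (y - cy) + cy)
            # Only copy when (x', y') is inside the image.
            if 0 <= sx < width and 0 <= sy < height:
                result[y][x] = image[sy][sx]
    return result
```

To rotate a single channel, one possible approach is to run the same loop on one component of each pixel and leave the other components unchanged.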

Shifting :

Shifting an image is quite similar to the previous flips function, except that we don't swap the contents of 2 elements: we simply overwrite one pixel with another. To apply a horizontal shift to the right by xshift pixels, for example, you browse your image matrix representation and, on each row, replace the last pixel with the (last one - xshift)th one, ..., and the (last one - n)th pixel with the (last one - n - xshift)th one. You then have to do something with the area that is no longer covered by the shifted image. You can fill it with a color of your choice, as in my illustration on the right, or you can wrap your image around itself (left illustration). To wrap the image around itself, first save the parts that will disappear because of the shift, then, once the shift is done, draw them at the correct location.
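
As a rough Python sketch of the horizontal shift to the right (the function name, the `wrap` flag and the `fill` value are assumptions made for this example), each row can be rebuilt from two slices: the part that stays visible and either the saved part that wraps around or the fill color.

```python
def shift_right(image, xshift, wrap=False, fill=0):
    """Shift every row of the image to the right by xshift pixels.

    With wrap=True the pixels pushed out on the right reappear on the left;
    otherwise the uncovered area is filled with `fill`.
    """
    width = len(image[0]) if image else 0
    if not 0 <= xshift <= width:
        raise ValueError("xshift must be between 0 and the image width")
    result = []
    for row in image:
        kept = row[:width - xshift]    # pixels still visible after the shift
        pushed = row[width - xshift:]  # pixels that disappear on the right
        if wrap:
            result.append(pushed + kept)
        else:
            result.append([fill] * xshift + kept)
    return result
```

For example, `shift_right([[1, 2, 3, 4]], 1, wrap=True)` gives `[[4, 1, 2, 3]]`, while the same call without wrapping gives `[[0, 1, 2, 3]]`.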

Resizing :

Splitting :

Since we have just seen the resize_down function, which creates an image whose dimensions are those of the original image divided by 2, the splitting function simply consists in displaying the result of resize_down 4 times on a surface equal to that of your original image. You are then free to keep splitting the resulting image as many times as you want (details).
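
The split can be sketched in Python as shown below. The `resize_down` used here is a stand-in written for this example, not the function from the post: it assumes a grey level image (one integer per pixel) with even dimensions and halves it by averaging each 2x2 block of pixels.

```python
def resize_down(image):
    """Stand-in for resize_down: halve both dimensions by averaging
    each 2x2 block (assumes integer grey levels, even width and height)."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[2 * y][2 * x] + image[2 * y][2 * x + 1]
             + image[2 * y + 1][2 * x] + image[2 * y + 1][2 * x + 1]) // 4
            for x in range(width // 2)
        ]
        for y in range(height // 2)
    ]


def split(image):
    """Tile the half-size image 4 times on a surface of the original size."""
    small = resize_down(image)
    top = [row + row for row in small]           # two copies side by side
    bottom = [list(row) for row in top]          # independent copy of the rows
    return top + bottom
```

Calling `split` again on its own result produces 16 thumbnails, and so on.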

Actually, this transformation helped me to implement my Warhol filter, which looks like this (the filter is intended to create an image similar to the paintings of Andy Warhol, an American artist and a leading figure in the visual art movement known as pop art) :

If you want to know how I got this result: split your image, convert it to grey level with the method of your choice (here are the different methods we already saw), then posterize this grey level image, which means reducing the number of grey levels. To do that, since RGB values go from 0 to 255, you can split this interval into ranges. While browsing the image matrix representation, you check the grey level value of the current pixel and replace it with a value from your reduced palette (containing only Black, (96,96,96), (160,160,160) and White, for example) depending on which range the current value falls into:

0-64, 65-127, 128-191, 192-255.
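
With these 4 ranges and the example palette (Black, 96, 160, White), the posterization step could look like this in Python. The function names are hypothetical and the image is assumed to be a list of rows of grey levels between 0 and 255.

```python
def posterize_value(grey):
    """Map a grey level (0-255) to one of the 4 palette values."""
    if grey <= 64:
        return 0      # Black
    if grey <= 127:
        return 96
    if grey <= 191:
        return 160
    return 255        # White


def posterize(image):
    """Posterize a whole grey level image (list of rows of integers)."""
    return [[posterize_value(grey) for grey in row] for row in image]
```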

At this point, your image should look like this :

Let's imagine that you used 4 ranges, like me, during the posterization. You can then apply the following procedure to colorize your image: if the grey level value of the current pixel is in the 1st range, the new color is Blue; in the 2nd range, Magenta; in the 3rd range, Yellow; otherwise, Orange. As you can see, I also applied a color rotation to the 4 thumbnails of Lena so that they differ from each other, but you can do whatever you want concerning the number of splits, the number of ranges for the posterization, the choice of colors...
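
A possible Python sketch of this colorization, using the ranges 0-64, 65-127, 128-191 and 192-255, is given below. The exact RGB triplets are assumptions (in particular (255,165,0) for Orange), and the function names are hypothetical.

```python
# One RGB color per range, in order: Blue, Magenta, Yellow, Orange.
# The triplets are assumptions chosen for this example.
WARHOL_COLORS = [(0, 0, 255), (255, 0, 255), (255, 255, 0), (255, 165, 0)]


def range_index(grey):
    """Return the index (0 to 3) of the range a grey level falls into."""
    if grey <= 64:
        return 0
    if grey <= 127:
        return 1
    if grey <= 191:
        return 2
    return 3


def colorize(image, colors=WARHOL_COLORS):
    """Replace each grey level by the color associated with its range."""
    return [[colors[range_index(grey)] for grey in row] for row in image]
```

Passing a rotated copy of the color list, for example `WARHOL_COLORS[1:] + WARHOL_COLORS[:1]`, is one simple way to make each thumbnail look different.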

Shearing :

In plane geometry, a shear mapping is a linear map that displaces each point in a fixed direction, by an amount proportional to its signed distance from a line that is parallel to that direction. In our case, we have to move every pixel of the image from coordinates (x,y) to the location (x',y') where

x' = x + (xshift * y) - (width * xshift)/2 and y' = y + (yshift * x) - (height * yshift)/2.

Note that x', y' must be inside the image matrix representation and xshift, yshift are floating point numbers representing a horizontal and a vertical shifting coefficient of your choice between -1 and 1.
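
The two formulas translate almost directly into Python. The sketch below is only an illustration: it assumes the image is a list of rows, rounds x' and y' down to integers, writes into a new image and leaves uncovered pixels at a `fill` value (the names `shear` and `fill` are hypothetical).

```python
import math


def shear(image, xshift, yshift, fill=0):
    """Move each pixel (x, y) to (x', y') using the shear formulas."""
    height = len(image)
    width = len(image[0]) if height else 0
    result = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            nx = math.floor(x + (xshift * y) - (width * xshift) / 2)
            ny = math.floor(y + (yshift * x) - (height * yshift) / 2)
            # Pixels that land outside the image are simply dropped.
            if 0 <= nx < width and 0 <= ny < height:
                result[ny][nx] = image[y][x]
    return result
```

Because pixels are pushed forward to their new location, some destination pixels may never be written when both coefficients are non-zero; they keep the `fill` value.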

Mipmapping :

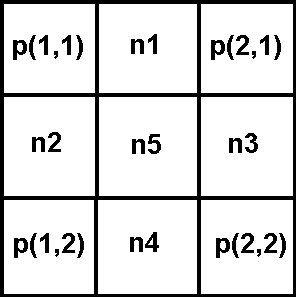

In 3D computer graphics, mipmaps (also MIP maps) are pre-calculated, optimized collections of images that accompany a main texture, intended to increase rendering speed and reduce aliasing artifacts. They are widely used in 3D computer games, flight simulators and other 3D imaging systems for texture filtering. Their use is known as mipmapping. Our goal here is to generate all the mipmaps of an image using the previous resize_down function and display them on a surface which has the same dimensions as the original image. If these dimensions are width x height, the dimensions of the first mipmap will be (width/2) x (height/2) (on the right of the illustration), ..., and the dimensions of the nth mipmap will be (width/2^n) x (height/2^n) where 2^n < width.
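
To make the sizes concrete, here is a small Python sketch that lists the dimensions of the successive mipmaps under the condition 2^n < width. The function name is hypothetical, integer division is assumed, and the extra stop when the height would reach 0 is an assumption added to handle images much wider than they are tall.

```python
def mipmap_sizes(width, height):
    """Return the (width, height) of each mipmap, starting with the first."""
    sizes = []
    n = 1
    # Stop when 2^n reaches the width, or when the height would become 0
    # (the second condition is an assumption for very wide images).
    while 2 ** n < width and height // 2 ** n > 0:
        sizes.append((width // 2 ** n, height // 2 ** n))
        n += 1
    return sizes
```

For a 256 x 256 image this yields 7 mipmaps, from 128 x 128 down to 2 x 2; each one can be produced by applying resize_down to the previous one.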

Note that the way the mipmaps are displayed can obviously be changed (details).

Mirror effects :

As you can see, there are many mirror effects, depending on the result you want to get (I named the 3rd one "The Mussel"). The good news is that the procedure is always the same and is really easy. You take one half of an image in a matrix: the right part, the left one, the top one or the bottom one. There are then 2 possible mirror effects. Either the part you chose stays where it is, and you replace the opposite part with the reverse of your selection using the previous flips function; or the part you chose replaces the opposite part and is itself replaced by its reverse, again using the flips function. Source code example :
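
The sketch below is not the original listing but a minimal Python illustration of the 2 possibilities when the left half is chosen. It assumes the image is a list of rows; the name `mirror_left` and the `keep` flag are hypothetical, and for odd widths the middle column is left unchanged.

```python
def mirror_left(image, keep=True):
    """Mirror effect based on the left half of the image.

    keep=True : the left half stays in place and its reverse
                replaces the right half.
    keep=False: the left half replaces the right half and is itself
                replaced by its reverse.
    """
    result = []
    for row in image:
        half = len(row) // 2
        left = row[:half]
        middle = row[half:len(row) - half]  # empty when the width is even
        mirrored = left[::-1]               # horizontal flip of the left half
        if keep:
            result.append(left + middle + mirrored)
        else:
            result.append(mirrored + middle + left)
    return result
```

The right, top and bottom variants follow the same pattern, for instance by flipping the image first, applying this function and flipping the result back.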

. To get this effect, it's very easy. When you have chosen an image (img1), you have to create a new image (img2) which corresponds to a horizontal shift of img1 (don't make a too big horizontal shift). Then you can create your anaglyph which has the particularity that all its
